NITDedupBenchmark: A Test Tool for Storage Systems with Data Deduplication

This tool is a test program for storage systems that generates and measures real workloads. It also works with SSDs and hardware-based storage, but I built it for file systems with data deduplication. Data deduplication and storage virtualization, or “Software Defined Storage”, are a new trend in the storage market. Deduplicating storage systems are especially suitable for VDI (Virtual Desktop Infrastructure) environments, since they reach very high deduplication rates there. The same applies to cloud environments with virtual servers that hold a lot of redundant data. A further advantage is that read IOPS in particular increase greatly, because a deduplicated data block only needs to be read once. On the other hand, deduplication requires processor performance and, most of the time, a very large memory cache as well.

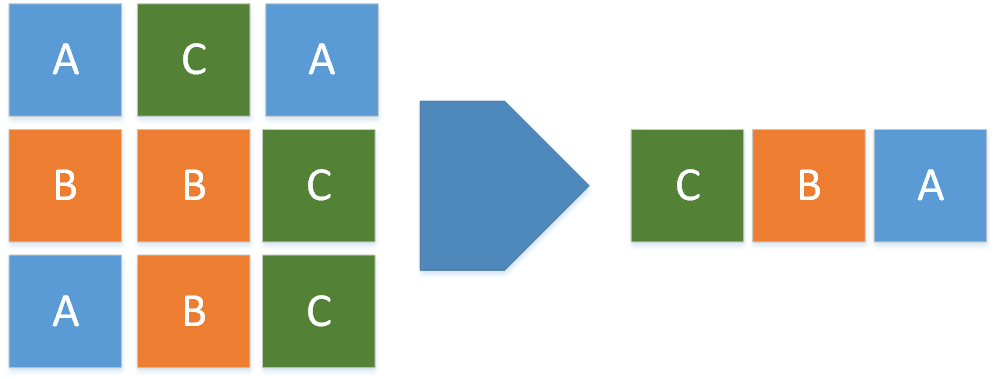

In-line data deduplication works like this: in real time, before writing, the device recognizes data blocks that already exist on the storage device. As a consequence, those blocks do not have to be written again.
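
To make the idea concrete, here is a minimal sketch of inline deduplication. It assumes fixed-size blocks identified by a SHA-256 fingerprint kept in an in-memory dictionary; the function name and the choice of SHA-256 are illustrative assumptions, not how any particular product works. Only blocks with unseen content are physically written; all others would merely be referenced.

```python
import hashlib
from typing import Dict


def inline_dedup_write(data: bytes, block_size: int, store: Dict[bytes, bytes]) -> int:
    """Hypothetical helper: write `data` block by block, skipping known content.

    Returns the number of blocks that had to be physically written.
    """
    physically_written = 0
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        fingerprint = hashlib.sha256(block).digest()  # content fingerprint of the block
        if fingerprint not in store:
            # Unseen content: the block must really be written.
            store[fingerprint] = block
            physically_written += 1
        # Known content: a real system would only record a reference here;
        # this sketch just skips the physical write.
    return physically_written
```

Writing three identical 4 KiB blocks followed by one different block costs only two physical writes, and writing the second block again later costs none.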

I asked myself: what happens when a file server is located on a virtual storage system and stores data that is difficult to compress, for example video footage? The device must then work hard to keep up a high write rate. Manufacturers' benchmarks always look good. But perhaps the correct random distribution is missing? Or were no long-term tests conducted for the benchmark results? What happens after an hour? Therefore I developed a new tool (benchmark) in which you can define what percentage of the written data can be deduplicated. It is also easy to use: unlike complex tools such as IOMeter, a customer needs no training to test his own environment. The test writes several terabytes of random numbers, generated in real time, to the storage device. The tool is free to use.

Furthermore, all data remains on the drive during the test. In the future, the write test is to be extended with a read test. This becomes especially interesting with hybrid storage systems (SSD cache with local NAS) once the SSD and RAM caches are no longer sufficient and the NAS has to be read. NITDedupBenchmark is meant to be a realistic test for such systems and should protect customers from a bad purchase. We recommend testing with the tool to see what happens, and we will gladly advise on which results are meaningful and which aren't.

First the legal information:

EXCLUSION FROM LIABILITY: USE OCCURS AT YOUR OWN RISK. NICK INFORMATIONS AND ANDREAS NICK ARE NOT LIABLE UNDER ANY CIRCUMSTANCES FOR DAMAGE TO HARDWARE OR SOFTWARE, LOST DATA, OR OTHER DIRECT OR INDIRECT DAMAGES RESULTING FROM THE USE OF THIS SOFTWARE. THIS IS BETA SOFTWARE. USE ONLY IN A TEST ENVIRONMENT.

A personal request to the community:

I ask the community to send me its test results and test scenarios, especially results at maximum load together with information about the hardware, and above all which hardware and software configurations lead to crashes or disruptions. I also ask that no developers be named in the comments on this blog. Please send me further suggestions for improving the tool.

Function and use

The test generates the random numbers during the write cycle. The results are cleaned up afterwards. Computing the results correctly is especially difficult with multiple threads. Up to 5000 cycles can be run, provided the corresponding space is available on the drive. The test works entirely with “unbuffered writes”, which means the Windows cache is bypassed during writing. The test is always carried out in a virtual machine, so you must provide enough processing power (CPU cores) for multi-threaded runs, ideally one core per thread. Incidentally, the test also works well with local hard drives and SSDs.
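
The post only says that a correct computation is difficult with multiple threads; it does not describe how NITDedupBenchmark combines per-thread results. The following is a minimal sketch of one common pitfall, assuming each thread records its own start time, end time and byte count on a shared clock (the `ThreadResult` type and both functions are hypothetical). Adding up per-thread rates overstates throughput when threads do not run over exactly the same interval, whereas dividing the total bytes by the overall wall-clock span does not.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ThreadResult:
    start: float        # seconds on a clock shared by all threads
    end: float          # seconds on the same clock
    bytes_written: int


def aggregate_throughput_mb_s(results: List[ThreadResult]) -> float:
    """Total bytes divided by the wall-clock span that covers all threads."""
    if not results:
        raise ValueError("no thread results")
    total_bytes = sum(r.bytes_written for r in results)
    wall_clock = max(r.end for r in results) - min(r.start for r in results)
    return total_bytes / wall_clock / 1_000_000


def naive_sum_of_rates_mb_s(results: List[ThreadResult]) -> float:
    """Misleading alternative: adds up each thread's own rate."""
    return sum(r.bytes_written / (r.end - r.start) for r in results) / 1_000_000
```

With one thread writing 100 MB from 0 to 1 s and another writing 150 MB from 0.5 to 2 s, the wall-clock method gives 125 MB/s, while summing the per-thread rates would claim 200 MB/s.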

Requirements:

- At least Windows Server 2008 R2; also Windows 7/8.1/10 and Windows Server 2012 (all 64-bit)
- .NET Framework 4.0 (4.5 is better): https://www.microsoft.com/en-US/download/details.aspx?id=17718
- PowerShell 3.0 (5.0 is better): https://www.microsoft.com/en-us/download/details.aspx?id=34595

NITDedupBenchmark writes data to the drive (1) using one or more threads. Selecting the drive is the first step.

Option (2) sets the test parameters: the data size per cycle, the block size per cycle (the amount written per operation), and the number of threads writing simultaneously.
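
The parameters from option (2), together with the number of test cycles from option (3), determine how many write operations each thread performs and how much space the test needs, since all data stays on the drive. A minimal sketch of that arithmetic follows; the `TestParameters` class is hypothetical, and the example values (1 GiB per cycle, 64 KiB blocks) are assumptions chosen to match the 100-cycle, 100 GB runs shown further down.

```python
from dataclasses import dataclass


@dataclass
class TestParameters:
    data_per_cycle_bytes: int
    block_size_bytes: int
    threads: int
    cycles: int

    def writes_per_cycle(self) -> int:
        # Write operations needed to cover one cycle's data (rounded up).
        return -(-self.data_per_cycle_bytes // self.block_size_bytes)

    def writes_per_thread(self) -> int:
        # Upper bound per thread when the writes are split evenly (rounded up).
        return -(-self.writes_per_cycle() // self.threads)

    def required_storage_bytes(self) -> int:
        # All data stays on the drive, so every cycle adds its full data size.
        return self.data_per_cycle_bytes * self.cycles


params = TestParameters(data_per_cycle_bytes=1024**3, block_size_bytes=64 * 1024,
                        threads=8, cycles=100)
```

With these values there are 16,384 writes per cycle, 2,048 per thread with 8 threads, and 100 GiB of required space for 100 cycles.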

Option (3), “Test Cycles”, sets how often the test is repeated. Because the data remains on the drive during the test, the required storage space is shown under (7); if it becomes too large, the field turns red. With “Dedup possible” (4) you choose the achievable dedup rate of the data. At 50% dedup, 50% of the data is random; at 20% dedup, 80% of the data is random, because only 20% of the data should be deduplicable.
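
The relationship between the dedup setting and the share of random data can be sketched as follows. This is not the tool's generator: the assumption here is that the deduplicable share consists of copies of one pattern block (an all-zero block, chosen arbitrarily) while the rest is random, and `generate_blocks` and `measured_dedup_ratio` are hypothetical names. The second function checks the result by counting unique block fingerprints.

```python
import hashlib
import os
import random
from typing import List


def generate_blocks(num_blocks: int, block_size: int, dedup_fraction: float,
                    seed: int = 0) -> List[bytes]:
    """Return blocks of which about `dedup_fraction` repeat one pattern block."""
    if not 0.0 <= dedup_fraction <= 1.0:
        raise ValueError("dedup_fraction must be between 0 and 1")
    duplicate_count = round(num_blocks * dedup_fraction)
    pattern = bytes(block_size)  # assumption: an all-zero block as the repeated content
    blocks = [pattern] * duplicate_count
    # The remaining blocks are random and therefore practically never deduplicable.
    blocks += [os.urandom(block_size) for _ in range(num_blocks - duplicate_count)]
    random.Random(seed).shuffle(blocks)  # interleave repeated and random blocks
    return blocks


def measured_dedup_ratio(blocks: List[bytes]) -> float:
    """Share of blocks that need not be stored because identical content exists."""
    unique = {hashlib.sha256(b).digest() for b in blocks}
    return 1.0 - len(unique) / len(blocks)
```

With 100 blocks at 50% dedup, 50 blocks repeat the pattern and 50 are random. Since the pattern itself must be stored once, the measured ratio is 0.49 rather than exactly 0.5.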

Click “Start” (5) to begin the test. Once it is running, a “Cancel” button appears, with which the test can be stopped at any time. However, it can take some time after pressing “Cancel” until the test actually ends.
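
One common reason why stopping takes a while is cooperative cancellation, where each writer thread checks a stop flag only between writes, so every in-flight write finishes first and the run ends only when all threads have exited. The sketch below assumes that design, which the post does not confirm; `writer`, `run_threads` and the `write_block` callback are hypothetical names.

```python
import threading
from typing import Callable, List


def writer(stop: threading.Event, write_block: Callable[[], None],
           blocks_to_write: int) -> int:
    """Write blocks until done or until cancel is requested; returns blocks written."""
    written = 0
    for _ in range(blocks_to_write):
        if stop.is_set():   # cancel is only noticed between two writes
            break
        write_block()       # a write that has started always runs to completion
        written += 1
    return written


def run_threads(num_threads: int, write_block: Callable[[], None],
                blocks_per_thread: int, stop: threading.Event) -> List[int]:
    results = [0] * num_threads

    def worker(index: int) -> None:
        results[index] = writer(stop, write_block, blocks_per_thread)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # the run only ends after every thread has finished its current write
    return results
```

Setting `stop` from another thread (the “Cancel” button) lets each worker stop after its current write, so large blocks or a slow device mean a longer delay.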

Feel free to connect with me via the social network buttons (8). I look forward to every message and to exchanging experiences with you.

Test examples

The following measurements first show a test with a local SSD (Solid State Drive), then a test with a data-deduplicated file system on an all-flash drive. No developers or manufacturers are mentioned here. It gets interesting when the cache fills up and data is deleted from the test drive. If the previously written blocks are not “reset”, the drive appears empty, but the previously written blocks are still held on the underlying device and take up resources. The significance of this is explained with the corresponding measurements.
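
The effect of deleted but not “reset” blocks can be illustrated with a toy model of a thin-provisioned volume. The assumption here is that “resetting” corresponds to the file system telling the device that blocks are free (for example via TRIM/UNMAP); the `ThinVolume` class is hypothetical and ignores blocks shared between files.

```python
from typing import Dict, Iterable, Set


class ThinVolume:
    """Toy model: a block consumes device space once written until it is unmapped."""

    def __init__(self) -> None:
        self.allocated: Set[int] = set()        # block addresses held by the device
        self.fs_files: Dict[str, Set[int]] = {}  # file system view: file -> addresses

    def write_file(self, name: str, addresses: Iterable[int]) -> None:
        blocks = set(addresses)
        self.fs_files[name] = blocks
        self.allocated |= blocks

    def delete_file(self, name: str, unmap: bool = False) -> None:
        blocks = self.fs_files.pop(name)
        if unmap:
            # Assumed TRIM/UNMAP-like step: the device learns the blocks are free.
            self.allocated -= blocks
        # Without unmap, only the file system forgets the blocks; the device keeps them.

    def fs_used_blocks(self) -> int:
        return len(set().union(*self.fs_files.values()))

    def device_used_blocks(self) -> int:
        return len(self.allocated)
```

After writing a 100-block test file and deleting it without unmapping, the file system reports 0 used blocks while the device still holds all 100.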

NOTICE: For the multi-threaded tests it is important to assign multiple CPU cores and sufficient RAM to the virtual machine. These resources are used up very quickly.

NOTICE: No developer is mentioned in this article, mainly because I do not yet have much experience with the tool and because I hope the manufacturer will come around in my current attempt to help a customer. The tests here were run in an independent environment.

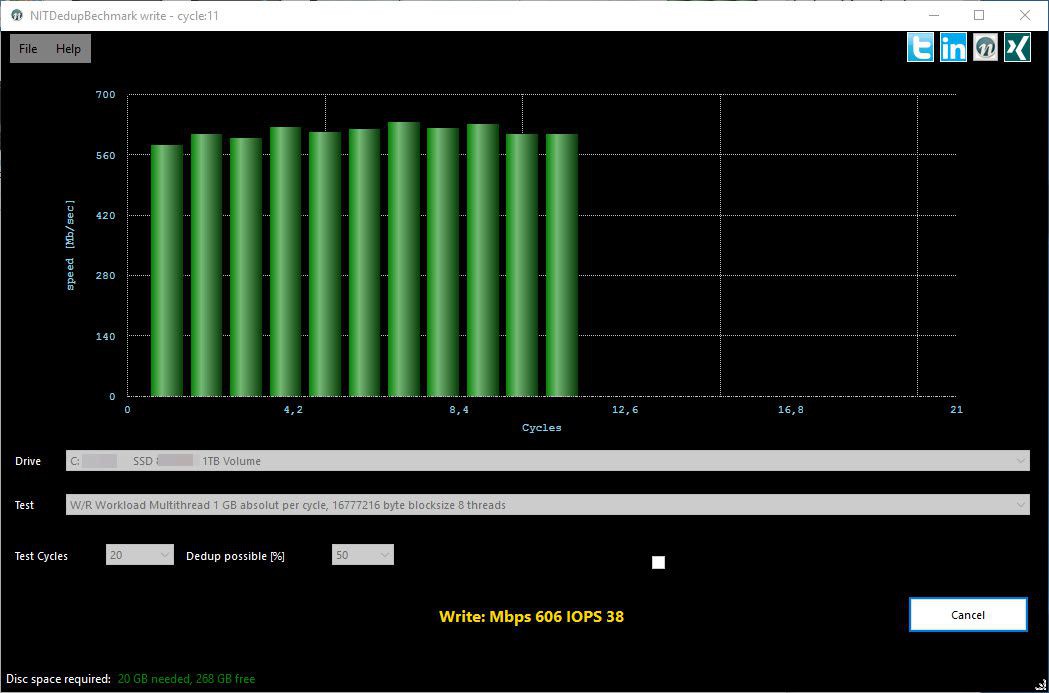

Local SSD, 50% Dedup, 100 Cycles, 8 Threads (100 GB)

First, a test with a local SSD, so that the differences between a deduplicated “all-flash” drive and an SSD can be recognized later.

The CPU has a lot to do in the Heavy Test with 8 threads:

Dedup File System (NTFS), “All Flash”, New Hard Drive, 50% Dedup, 100 Cycles, 100 GB

For a true “Heavy Test”, the virtual machine running the test needs access to the corresponding resources. For the 16-thread test, the machine should be assigned 16 cores. Since NTFS and thin provisioning are often used, we take a completely new drive whose blocks have never been used.

Since we're still working with a beta, no manufacturers are mentioned here.

The same test with different hardware, a new version of the software, and a different block size:

Dedup File System (NTFS), “All Flash”, 5% Dedup, 8 Threads, 100 Cycles

The administrative overhead increases further, since almost every block must be written in full. The performance is at the level of a good USB flash drive.

Results of this example

As expected, the write rate drops as the dedup rate decreases.

After some time of continuous overwriting, the storage system reacts sluggishly, even with new, empty drives. Even at 50% dedup, high data throughput is no longer achieved. Perhaps a full database? The steady throughput seen in the first test with 50% dedup no longer occurs even at 70% dedup. Resetting snapshots and remounting the drive did not help either. Only after deleting the volume (the disk) and remounting the drive did the results improve. The problem may also lie with the SSDs themselves, which become slower after heavy use.

For a test series, the device's cache should first be filled with data. Only then do you get realistic performance results under load.
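
When evaluating a series, the cycles that only fill the cache can be left out of the average. A minimal sketch, assuming per-cycle byte counts and durations are available and the cache size is known or estimated; `steady_state_throughput` is a hypothetical helper, not part of the tool.

```python
from typing import List


def steady_state_throughput(cycle_bytes: List[int], cycle_seconds: List[float],
                            cache_bytes: int) -> float:
    """Average throughput (bytes/s) over the cycles that start after the cache is full."""
    written = 0
    for i, size in enumerate(cycle_bytes):
        if written >= cache_bytes:
            # From this cycle on, writes can no longer be absorbed by the cache alone.
            return sum(cycle_bytes[i:]) / sum(cycle_seconds[i:])
        written += size
    raise ValueError("cache was never filled; run more cycles")
```

For four 1 GB cycles taking 1, 1, 4 and 4 seconds with a 2 GB cache, the function skips the first two cycles and reports 250 MB/s, while the overall average would suggest 400 MB/s.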

Summary: Anyone planning to acquire such a system must carefully determine whether the advantages outweigh the disadvantages, that is, high performance and reduced storage consumption against possible variance in read and write access.

With NITDedupBenchmark, any prospective customer can easily test an environment. The tool can potentially cause damage, since it creates a heavy workload. Therefore, use it only in a test environment and not with production data. Test sequences with 100 or more cycles are recommended.

For the sake of comparison, please send me exactly which manufacturers and configurations you used along with your test results, as well as information about any crashes and data loss.

And the read test in version 0.8:

(Dedup 50%, 200 Cycles)

Download:

NITDedupBenchmark


Version 0.85

Andreas Nick 2016
